If you asked laypeople on the street what they thought a supercomputer was, a large percentage would probably cite examples from popular movies – and usually examples with a nefarious reputation. From HAL 9000 (2001: A Space Odyssey) to VIKI (I, Robot) and even The Terminator’s Skynet, pop culture often portrays supercomputers as sentient systems that have evolved and turned against humanity.

Tell that to researchers at Lawrence Livermore National Laboratory, or the National Weather Service, and they’d laugh you out of the room. The truth is that supercomputers today are far from self-aware, and the only AI in use is essentially an overblown search bar that scans very large data sets.

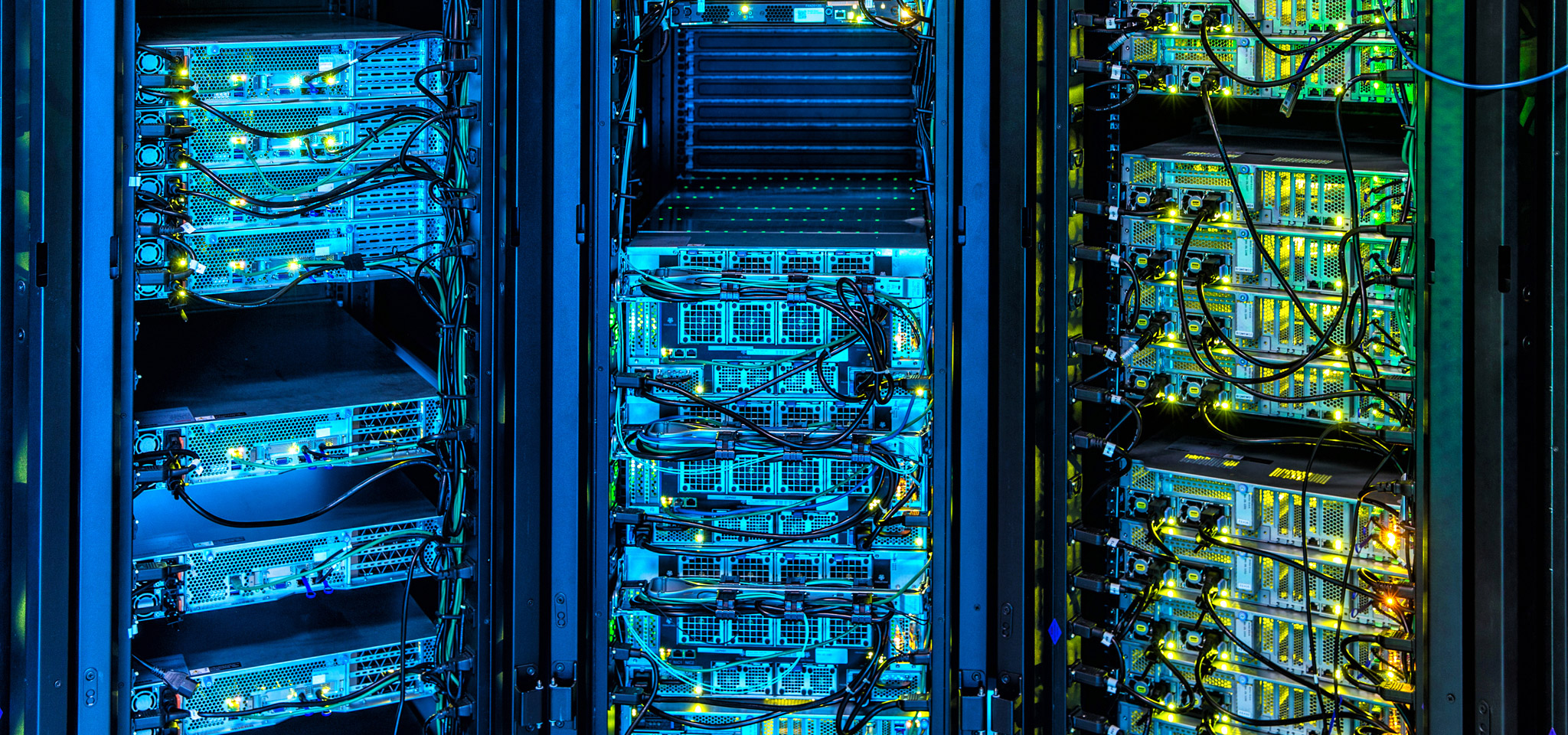

Today, supercomputers power a multitude of applications at the forefront of progress: from oil and gas exploration to weather prediction, and from financial markets to the development of new technologies. Supercomputers are the Lamborghini or Bugatti of the computing world and, at Kingston, we pay a lot of attention to the advancements that are pushing the boundaries of computing. From DRAM utilisation and tuning, to firmware advancements in managing storage arrays, to the emphasis on consistent transfer speeds and latency rather than peak values, our technologies are deeply influenced by the bleeding edge of supercomputing.

Similarly, cloud and on-premises data centre managers can learn a lot from supercomputing about designing and managing their infrastructures, and about how best to select components that will be ready for future advancements without huge overhauls.